DeepSeek's first-generation reasoning models, achieving performance comparable to OpenAI-o1 across math, code, and reasoning tasks.

Models

DeepSeek-R1

Distilled models

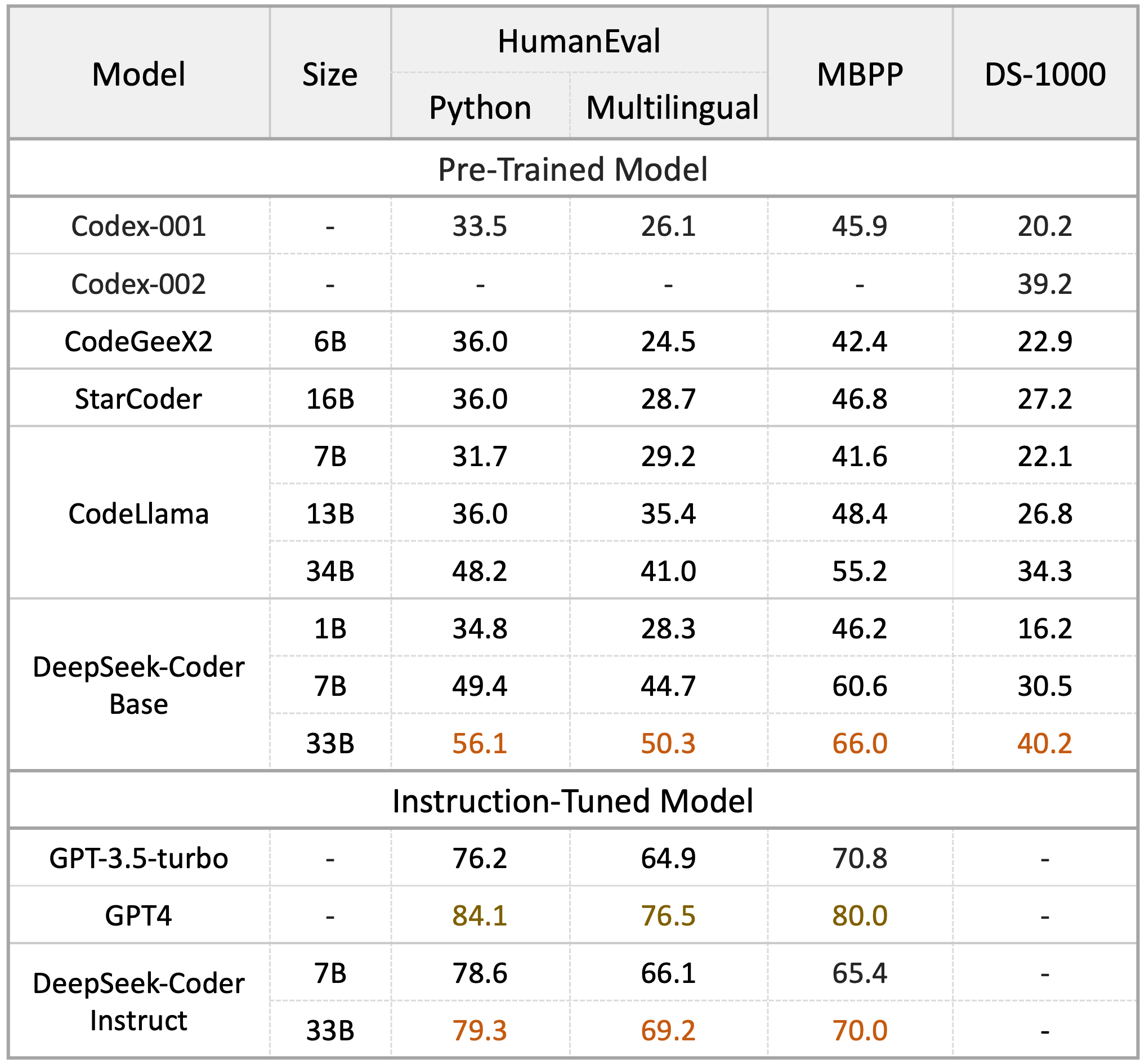

The DeepSeek team has shown that the reasoning patterns of larger models can be distilled into smaller models, resulting in better performance than the reasoning patterns discovered through RL on small models.

Below are the models created by fine-tuning several dense models widely used in the research community on reasoning data generated by DeepSeek-R1. The evaluation results show that the distilled smaller dense models perform exceptionally well on benchmarks.
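As a rough illustration of what fine-tuning on reasoning data generated by a larger model can look like at the data-preparation stage, the sketch below turns teacher outputs into prompt/completion pairs for supervised fine-tuning. It is not DeepSeek's pipeline: the `TeacherSample` schema, the function names, the filtering rule, and the `<think>` delimiters used as the formatting convention are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class TeacherSample:
    """One reasoning trace produced by the teacher model (hypothetical schema)."""
    prompt: str
    reasoning: str
    answer: str


def to_sft_example(sample: TeacherSample) -> dict:
    """Turn a teacher trace into a prompt/completion pair for supervised fine-tuning.

    The <think> delimiters are an assumed formatting convention for this sketch.
    """
    completion = (
        f"<think>\n{sample.reasoning.strip()}\n</think>\n{sample.answer.strip()}"
    )
    return {"prompt": sample.prompt.strip(), "completion": completion}


def build_sft_dataset(samples: list[TeacherSample]) -> list[dict]:
    """Keep only traces with non-empty reasoning and answer, then format them."""
    return [
        to_sft_example(s)
        for s in samples
        if s.reasoning.strip() and s.answer.strip()
    ]


if __name__ == "__main__":
    traces = [
        TeacherSample("What is 12 * 7?", "12 * 7 = 84.", "84"),
        TeacherSample("Empty trace", "", "n/a"),  # dropped: no reasoning
    ]
    for row in build_sft_dataset(traces):
        print(row)
```

The resulting records could then be passed to any standard supervised fine-tuning setup for a smaller dense model. The student learns to reproduce the teacher's reasoning text directly, rather than discovering it through RL.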

DeepSeek-R1-Distill-Qwen-1.5B

DeepSeek-R1-Distill-Qwen-7B

DeepSeek-R1-Distill-Llama-8B

DeepSeek-R1-Distill-Qwen-14B

DeepSeek-R1-Distill-Qwen-32B

DeepSeek-R1-Distill-Llama-70B

License

The model weights are licensed under the MIT License. The DeepSeek-R1 series supports commercial use and allows any modifications and derivative works, including, but not limited to, distillation for training other LLMs.
